The slogan “Stop Killer Robots” feels like something that even our currently fractious society could maaaybe find agreement on. However much we dislike each other, we are still chauvinists for the species—no one is in favor of Skynet and Terminators, are they? Yet, while “Stop Killer Robots” is a memorable phrase, it is a little cheeky, and that irreverence almost dissuades audiences from further inquiry—after all, there isn’t a need to stop killer robots…is there?

Evidently, there is! To my surprise (though it definitely shouldn’t have been one), Big Tech’s phenomenal but seemingly frivolous advances in Artificial Intelligence, such as mastering all our board games, writing absurdist film scripts, or manipulating the world’s collective free attention with a video app (okay, maybe that one isn’t so frivolous), have been accompanied by a global effort to develop military applications for AI, one that leads inevitably toward a future where lethal force will be exercised without human input.

Cultivating awareness of this development and marshaling resistance to it is the goal of the advocacy organization Stop Killer Robots, which commissioned today’s film pick from director Matthew Harmer and the London-based creative agency Nice and Serious.

Sci-Fi Author: In my book I invented the Torment Nexus as a cautionary tale

Tech Company: At long last, we have created the Torment Nexus from classic sci-fi novel Don’t Create The Torment Nexus

Alex Blechman (@AlexBlechman), November 8, 2021

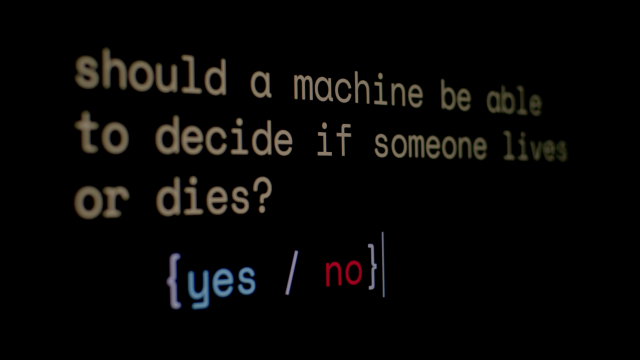

Immoral Code is thus an “issue-doc,” and its beginning suggests the type of film I’m left cold by. The premise is suitably interesting, and a degree of explication of the issue and its context is required; however, the deployment of “talking heads” to intone with grave seriousness about “imminent danger” is not a style of documentary filmmaking that resonates with me. Stick with it, however, as the film quickly introduces new modes of storytelling to complement this traditional approach. In particular, I found the decision to invite normal citizens into the film via a process that looks somewhat like a focus group to be simply ingenious. Termed a “Social Study” by the creative team, the process has individuals, sometimes paired with a friend or family member, answer questions of escalating moral complexity posed by a computer screen in a crude approximation of an AI algorithm’s decision tree. The camera captures their candid reactions as they process and verbalize their thoughts in an impromptu philosophical inquiry.

These sequences were at the core of the pitch by the Nice and Serious creative development duo of Rickey Welch and Serafima Serafimova (disclosure: Serafima is a writer/curator on the Short of the Week team as well), and while they are augmented by expert interviews and pleasingly well-thought-out b-roll across the film’s 23-minute runtime, they comprise the film’s essential appeal. Documentaries that capture the candid reactions of ordinary people are a small but notable sub-genre, and Immoral Code stirs fond memories for me of previous hits like Topaz Adizes’ {The And} series, Tatia Pilieva’s viral hit First Kiss, or the film festival favorite 10 Meter Tower.

But past the novelty, the Social Study approach is a perfect fit for the aims of the film. AI is an abstract concept, and while experts can discuss its implications in a way that makes it sound as if it has motives, it is not, ultimately, a character. Its coding is not relatable to a lay audience, so its “thinking” is foreign to our experience. Immoral Code is a piece of advocacy and is meant to be a polemic, but it lacks an essential narrative component: an antagonist.

Subtle design and off-angle framing work to “personify” the algorithm.

The Social Study functions as a workaround for this essential lack. On the one hand, the spartan computer screen almost does the trick of “personifying” AI. The slow readout of the questions and the dry logic of their phrasing make it seem like there is a back and forth between an alien intelligence and the human subjects. The photography and editing subtly contribute to a sense of menace behind the screen, drawing on years of cinematic tricks developed in the wake of HAL 9000.

However, the study also shifts the emphasis away from the impersonal algorithm, dramatizing, rather than simply asserting, why the core moral dilemma is so difficult. To see humans grappling with these questions of life or death demonstrates their complexity in a visceral way, highlighting their essential subjectivity. Intuitive values are expressed and held up for scrutiny. It is hard enough to even recognize them as such; how could one ever “code” them?

Above all, what I like about the approach of Immoral Code is that its format fosters audience interaction. The single biggest criticism I have of issue-docs, and the biggest conundrum for the activists behind them, is that they lecture at viewers, instilling a passivity that makes it hard to engage them intellectually or subsequently shift them towards action. The format of Immoral Code provides an opening for viewers to participate in the same manner as the film’s subjects, and that is something more films of this type should aspire to emulate.

Jason Sondhi
